Several videos are currently circulating in various AI groups that may represent another milestone in the generative AI video world.

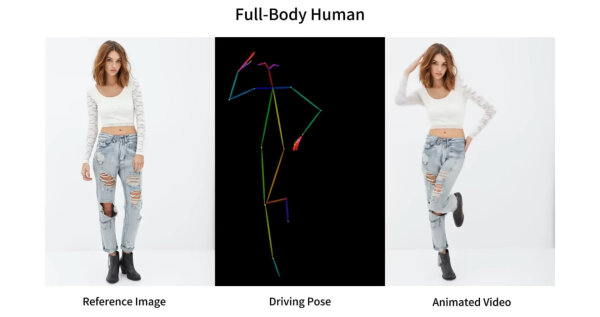

In short, the video is based on a new AI model that makes it possible to create an animated video from a still image using vector poses. It's best to watch the project video for yourself:

However, there are still a lot of doubts on the net that everything is above board here - because there has never been such a realistic animation of photorealistic source material before. And the project has not yet published any code.

However, the  already available paper may already provide the decisive clue as to how the extremely good and consistent quality can be achieved:

already available paper may already provide the decisive clue as to how the extremely good and consistent quality can be achieved:

"To maintain the consistency of complicated appearance features from the reference image, we develop ReferenceNet to merge detail features through spatial attention. To ensure controllability and continuity, we introduce an efficient pose guider to control the character's movements and employ an effective temporal modeling approach to ensure smooth transitions between video frames. By extending the training data, our approach can animate arbitrary characters, leading to better character animation results compared to other frame-to-video methods."

Simply put, the model must be re-trained, or "finetuned", with the desired output models before use. At least that's how you could understand "expanding the training data".

However, it shouldn't be too long before the community gets access to the code via  Github project page. And then we will see whether it will soon be possible to animate photorealistic material using a simple digital manikin.

Github project page. And then we will see whether it will soon be possible to animate photorealistic material using a simple digital manikin.