[11:00 Sun, 5 December 2021 by Thomas Richter]

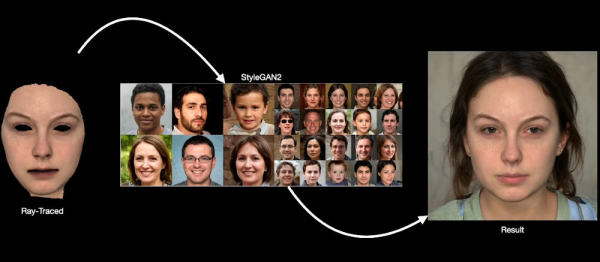

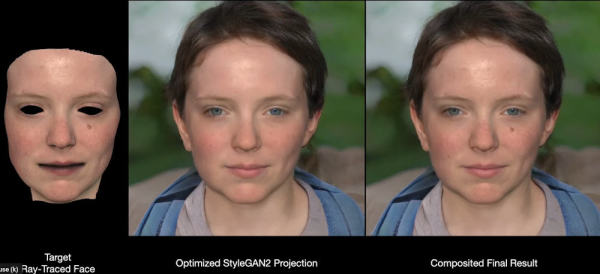

We've already reported on Nvidia's StyleGAN deep learning algorithm.

(Image: Combination of rendered face with StyleGAN2)

Faces can now be digitized and simulated in very high quality by means of multi-camera recordings, but only the skin and the three-dimensional profile of the face, not the whole head: the hair, the eyes and the interior of the mouth are all missing from these models.

(Image: CGI 3D face model without hair, eyes and mouth)

Therefore, when these face models were used in movies, a great deal had to be retouched and supplemented by hand afterwards, in an extremely elaborate process, to get a realistic animated head. One example is the re-animation of the since-deceased actors Peter Cushing (Grand Moff Tarkin) and Carrie Fisher (Princess Leia) in short scenes in Star Wars.

The new project now seeks to automate this final step via AI. It combines high-quality CGI facial renderings with the strengths of faces generated by deep learning using StyleGAN2 (github.com/NVlabs/stylegan2), merging the two into an extremely real-looking virtual face. Generated faces have so far suffered from their relatively low resolution (currently 1024×1024), which is still far below what rendering for movies requires, and from a lack of coherence across sequences of images. In contrast to the CGI faces, however, they shine in their representation of hair, eyes and mouth.

(Image: Rendered faces compared with StyleGAN2-generated faces)

For this purpose, the skin surface generated by ray tracing is composited onto the face generated by StyleGAN2, which includes the rest of the head. The new method also allows the lighting of the generated faces to be changed realistically, including eyes, hair and mouth, with correct shadow casting:

(Image: Combination of rendered face with StyleGAN2 under different light incidence)

More complex changes in ambient lighting, in both direction and color, are also possible. The AI algorithm adds eyes and hair to match the rendered face:

(Image: Combination of rendered face with StyleGAN2 under different lighting situations)

And, very important for the task Disney is targeting: the results also look realistic when animated, for example when changing from one facial expression to another or when speaking. Slight changes in camera perspective can be made without problems, but with larger changes in perspective the algorithm still tends to create clearly visible artifacts. The method is not yet perfect, but it is a big step on the way to artificial faces suitable for film.
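
The article only says that the ray-traced skin is combined with the StyleGAN2 head "by compositing" and gives no details of the actual pipeline. As a rough illustration of the general idea, here is a minimal sketch of standard per-pixel alpha blending (the "over" operator). It assumes a skin mask is available; the names `composite_over`, `rendered_skin`, `gan_head` and `skin_mask` are hypothetical and do not come from the project.

```python
from typing import List, Tuple

Pixel = Tuple[float, ...]   # RGB values in [0, 1]
Image = List[List[Pixel]]   # rows of pixels
Mask = List[List[float]]    # per-pixel skin coverage in [0, 1]


def composite_over(rendered_skin: Image, gan_head: Image, skin_mask: Mask) -> Image:
    """Blend rendered skin over a generated head: out = a * skin + (1 - a) * gan.

    Illustrative assumption only: the real method may blend very differently.
    """
    if not (len(rendered_skin) == len(gan_head) == len(skin_mask)):
        raise ValueError("all inputs must have the same height")
    out: Image = []
    for skin_row, gan_row, mask_row in zip(rendered_skin, gan_head, skin_mask):
        if not (len(skin_row) == len(gan_row) == len(mask_row)):
            raise ValueError("all inputs must have the same width")
        out_row = []
        for skin_px, gan_px, a in zip(skin_row, gan_row, mask_row):
            a = min(1.0, max(0.0, a))  # clamp the mask value to [0, 1]
            out_row.append(
                tuple(a * s + (1.0 - a) * g for s, g in zip(skin_px, gan_px))
            )
        out.append(out_row)
    return out


# Toy 1x3 "image": a skin-only pixel, a half-blended edge pixel, a hair-only pixel.
skin = [[(1.0, 0.0, 0.0), (1.0, 0.0, 0.0), (1.0, 0.0, 0.0)]]
gan = [[(0.0, 0.0, 1.0), (0.0, 0.0, 1.0), (0.0, 0.0, 1.0)]]
mask = [[1.0, 0.5, 0.0]]
result = composite_over(skin, gan, mask)
```

Where the mask is 1 the rendered skin wins, where it is 0 the generated hair, eyes or mouth show through, and values in between give a soft transition at the boundary. A production pipeline would run this on full-resolution arrays rather than nested lists.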