[13:16 Sun, 27 April 2025 by Thomas Richter]

Researchers at Stanford University have achieved something remarkable: they have released FramePack, an open-source video AI whose novel architecture makes it possible for the first time to generate AI videos with only 6 GB of graphics card memory. Previously, generating high-quality AI video usually required at least 12 GB of VRAM. Now even an Nvidia RTX 3050 or a comparable mid-range GPU is sufficient to generate videos for free on your own PC. In addition, the new method allows clips of up to one minute, which is far longer than what current locally run video AI models produce and longer than what most online video AIs offer.

What makes FramePack different?

Traditional video diffusion models consider a growing number of previously generated, still noisy frames for each new frame. This "temporal context" scales linearly with the clip length and drives up memory requirements. With longer videos, this approach often runs into two problems: the model tends to "forget" what happened at the beginning of the video, and image quality deteriorates as small errors accumulate, which is referred to as "drifting."
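The linear growth of the temporal context described above can be illustrated with a few lines of Python. This is only a back-of-the-envelope sketch: the number of tokens per frame and the frame rate are assumed values chosen for illustration, not figures from FramePack or any specific model.

```python
# Hypothetical value: latent tokens contributed by one past frame.
TOKENS_PER_FRAME = 1536
# Assumed frame rate, used only to convert clip length into frame count.
FRAMES_PER_SECOND = 30


def linear_context_tokens(num_past_frames: int,
                          tokens_per_frame: int = TOKENS_PER_FRAME) -> int:
    """Context size when every past frame is kept at full resolution."""
    return num_past_frames * tokens_per_frame


if __name__ == "__main__":
    for seconds in (5, 30, 60):
        frames = seconds * FRAMES_PER_SECOND
        tokens = linear_context_tokens(frames)
        print(f"{seconds:>2} s clip: {frames:>4} frames, {tokens:,} context tokens")
```

Under these assumptions, doubling the clip length doubles the context the model has to hold, which is why memory becomes the limiting factor for long clips.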

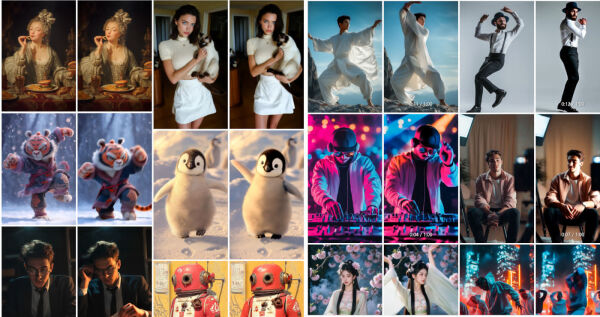

FramePack addresses these challenges by compressing the video frames into a fixed context length according to their importance, which drastically reduces GPU memory requirements. This allows FramePack to process more frames without the computation becoming too expensive, which counteracts the "forgetting." In addition, special techniques such as "anti-drifting sampling" are used, which, for example, take better account of the context of the entire video in order to minimize drifting and increase visual quality. The computing power required for generating videos is said to be comparable to that for individual still images in the diffusion process. A FramePack model with around 13 billion parameters can generate a 60-second clip on a GPU with only 6 GB of VRAM.

System requirements and performance

FramePack currently runs on Nvidia's RTX 30, 40, and 50 series graphics cards with at least 6 GB of graphics card memory, including the mobile versions of the corresponding GPUs. When optimized via "teacache", an Nvidia RTX 4090 generates around 0.6 frames per second, and each frame is displayed immediately after it is generated. Nvidia Turing and older generations have not been officially verified. AMD and Intel GPUs and Apple's M chips are currently not supported. Alternatively, FramePack can be used on rented hardware, for example via Hugging Face, but this is subject to a fee.

Installation on your own PC

The easiest installation option on the local PC is probably …

Quality

FramePack can represent human movements such as dancing very well (something some other AI models have problems with), as can be seen on the FramePack page.
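To make the idea of a fixed context length more concrete, here is a minimal sketch of how compressing frames by importance can bound the total context. It assumes a simple geometric schedule in which the most recent frame is kept at full size and each older frame gets half the tokens of the one after it. The schedule, the ratio, and the token count are illustrative assumptions, not the actual FramePack implementation.

```python
def packed_context_tokens(num_past_frames: int,
                          tokens_per_frame: float = 1536.0,
                          ratio: float = 2.0) -> float:
    """Total context when each older frame gets 1/ratio of the tokens
    of the frame that follows it (assumed geometric schedule)."""
    total = 0.0
    share = tokens_per_frame  # the most recent frame is kept at full size
    for _ in range(num_past_frames):
        total += share
        share /= ratio  # older frames are compressed more strongly
    return total


def context_upper_bound(tokens_per_frame: float = 1536.0,
                        ratio: float = 2.0) -> float:
    """Limit of the geometric series: the context never exceeds this value."""
    return tokens_per_frame * ratio / (ratio - 1.0)


if __name__ == "__main__":
    for frames in (1, 10, 150, 1800):
        print(f"{frames:>4} past frames: {packed_context_tokens(frames):.1f} tokens")
    print(f"upper bound: {context_upper_bound():.1f} tokens")
```

With such a schedule the context converges to a constant no matter how many frames have been generated, which matches the article's point that memory requirements no longer depend on clip length.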
