[18:11 Wed, 14 July 2021 by Thomas Richter]

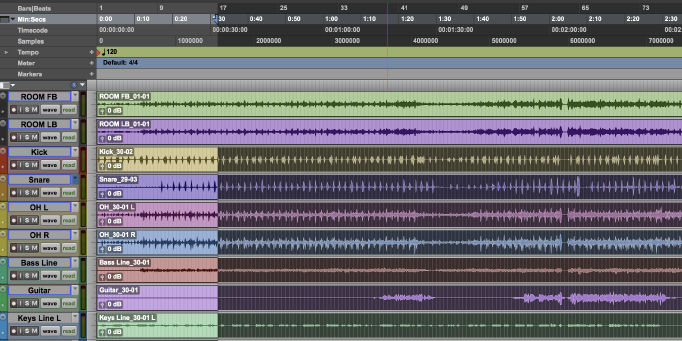

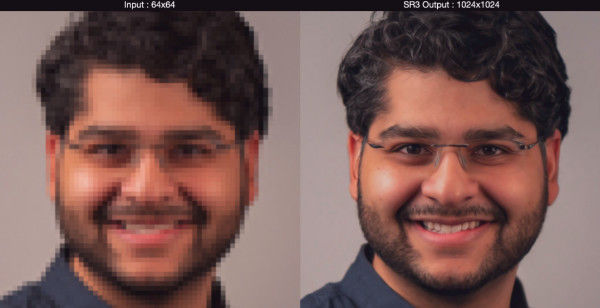

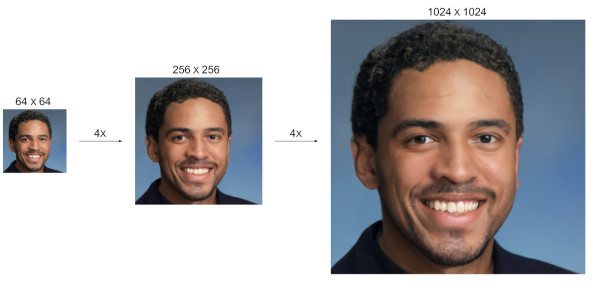

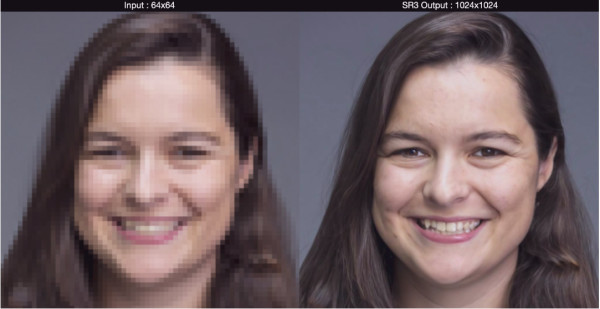

A team of Google researchers has unveiled a new deep-learning super-resolution algorithm that puts previously developed methods to shame. Dubbed SR3 (Super-Resolution via Repeated Refinement), the method shows its power especially on upscaled faces. Face photos with a resolution of only 64 × 64 pixels are upscaled in two steps, first to 256 × 256 pixels and then to 1,024 × 1,024 pixels, a 16-fold magnification in each dimension. In another experiment, images of objects such as flowers, fire engines, birds, and buildings are scaled up from 64 × 64 to 256 × 256 pixels.

The high quality of the upscaled images was demonstrated in a blind test. Subjects were shown the high-resolution original and a version that had first been reduced in resolution and then upscaled again with SR3, and were asked to decide which one looked better. For an 8-fold upscaling of faces from 16 × 16 to 128 × 128 pixels, about half chose the upscaled face.

Upscaling in several steps

This is exactly the result random guessing would produce, which means the subjects could no longer tell the original from the version generated by super-resolution. In the much more difficult task of upscaling a 64 × 64 photo of a natural object fourfold to 256 × 256 pixels, 40% of the participants still preferred the generated image to the original.

How does super-resolution actually work?

Until the last decade, the dogma was that increasing the resolution of an image cannot add any detail that is not already in the original, but for the past few years a paradigm shift has been under way. The buzzword is super-resolution: technologies that, in post-processing, add more detail to an image than is present in its digital original. Put simply, these are methods that increase the resolution of an image using more than just interpolation between known pixels to fill in the "extra pixels." A super-resolution (SR) application therefore has to add details coherently.
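For contrast, the plain "interpolation between known pixels" that classical upscaling relies on fits in a few lines. The following is a minimal, hypothetical sketch of bilinear interpolation on a grayscale image stored as nested lists (the function name `bilinear_upscale` is made up for illustration; this is neither SR3 nor any particular library's implementation). Every new pixel is a weighted average of up to four original neighbors, so the result cannot contain detail, or even brightness values, that the original lacks.

```python
def _src_coord(i, out_len, in_len):
    # Map output index i back to a (fractional) input coordinate,
    # aligning the first and last pixels of input and output.
    if out_len == 1 or in_len == 1:
        return 0.0
    return i * (in_len - 1) / (out_len - 1)


def bilinear_upscale(img, factor):
    """Upscale a 2D grayscale image (list of lists of floats) by an integer
    factor using bilinear interpolation. Hypothetical illustration only."""
    h, w = len(img), len(img[0])
    out_h, out_w = h * factor, w * factor
    out = []
    for y in range(out_h):
        sy = _src_coord(y, out_h, h)
        y0 = int(sy)
        y1 = min(y0 + 1, h - 1)
        fy = sy - y0  # vertical weight of the lower neighbor row
        row = []
        for x in range(out_w):
            sx = _src_coord(x, out_w, w)
            x0 = int(sx)
            x1 = min(x0 + 1, w - 1)
            fx = sx - x0  # horizontal weight of the right neighbor column
            # Blend horizontally in the two neighboring rows, then vertically.
            top = img[y0][x0] * (1 - fx) + img[y0][x1] * fx
            bottom = img[y1][x0] * (1 - fx) + img[y1][x1] * fx
            row.append(top * (1 - fy) + bottom * fy)
        out.append(row)
    return out


if __name__ == "__main__":
    small = [[0.0, 100.0], [100.0, 200.0]]
    for r in bilinear_upscale(small, 2):
        print([round(v, 1) for v in r])
```

Every output value stays between the darkest and brightest input pixel, which is exactly the limitation described above: interpolation only smooths what is already there, whereas a learned super-resolution model has to supply plausible new structure.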
More upscaled objects in comparison

With the advent of deep learning, super-resolution has received a real boost, because AI algorithms have become very good at recognizing objects and filling in their details. For example, if an AI has seen millions of faces from different angles and in diverse lighting conditions, it can then add learned details to any face in an image. The same holds for plants, cars, or animals. Neural networks trained on many images learn typical natural structures and patterns, whether those of faces or of other objects. When upscaling, these regularities are exploited: structures in the low-resolution image are reconstructed in a plausible way.

More about this topic in our article … Adobe already has such a super-resolution algorithm. The new SR3 algorithm (