[11:52 Fri, 6 December 2024 by Thomas Richter]

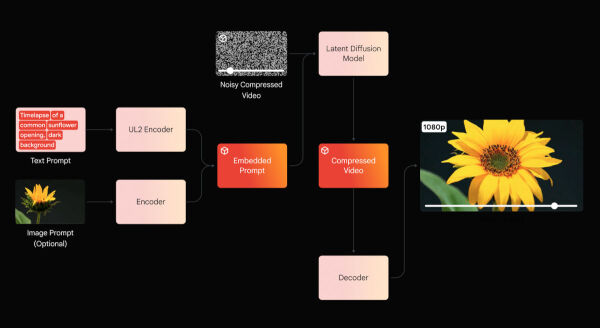

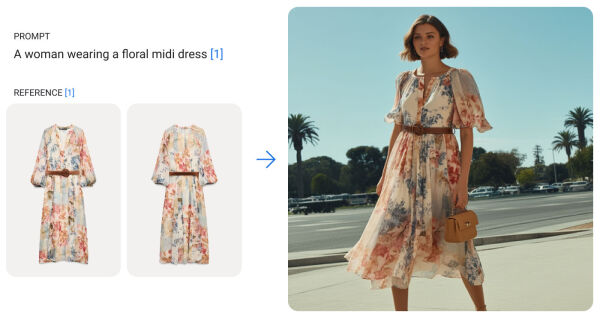

While OpenAI's former flagship model Sora is still not publicly available, the battle for the best video AI continues unabated. Google has now released its [Veo high-end video model](www.slashcam.de/news/single/Google-DeepMind-Veo-erzeugt-1080p-Clips-mit-verbes-18586.html) (first introduced in May) along with a new generation of its Imagen image AI, in the form of a preview version. However, access is currently limited to a selected group of users via the [VideoFX portal](labs.google/videofx) (unfortunately not yet available in Germany) and to business customers via Google's Vertex AI platform.

What Can Veo Do?

Veo supports various cinematic and visual styles, such as aerial or time-lapse shots, and generates 1080p videos from text or image prompts. The clips can run longer than a minute, though Google has not provided specific details. Google also promises high consistency, meaning no disruptive morphing of objects and no flickering during camera movements or object interactions in the scene.

Veo also offers special control tools for AI filmmaking, something all video AIs strive for so that creators can implement their ideas precisely. For instance, already-generated videos can be edited on a per-object basis, for example by adding objects afterward.

Veo can extend generated videos to a length of one minute or longer, using either a single prompt or multiple prompts that describe an entire sequential narrative, as demonstrated in the following example.
This is an unedited direct output from Veo based on the following prompt sequence:

- A time-lapse shot through a bustling dystopian neighborhood with bright neon signs, flying cars, and fog, nighttime, lens flare, volumetric lighting
- A camera pan through a futuristic, dystopian city with bright neon signs, spaceships in the sky, nighttime, volumetric lighting
- A neon hologram of a high-speed car, light-speed, cinematic, incredible detail, volumetric lighting
- Cars exit the tunnel back into the real-world city of Hong Kong

Veo is also said to have a highly advanced understanding of natural language and visual semantics, enabling it to generate videos that closely match the mood and the precise visual details described in the prompt. Google and DeepMind bring to Veo their extensive experience from other projects such as Generative Query Network (GQN), DVD-GAN, Imagen-Video, Phenaki, WALT, VideoPoet, and Lumiere, as well as the Transformer architecture and Gemini.

Interestingly, Google mentions that Veo should generate video very quickly thanks to high-quality but highly compressed video representations. Initial tests will soon show how fast it really is.

Quality

Judging by the demo clips shown, the quality of object rendering and the consistency during movement are very high. In some of the example videos, you have to look very closely to recognize that they were generated by AI. However, a final verdict will only be possible once Veo is publicly available and its image quality and prompt adherence can be compared directly with other top video AIs. Veo will then face competition from several Chinese video AIs, especially [Kling 1.5](www.slashcam.de/news/single/Kostenlose-Video-KI-Kling-jetzt-in-Version-1-5-mit-18836.html), which have already set new standards and surpassed Sora. Rumor has it that Sora will be released publicly this December, and the pressure is certainly mounting.
It's striking how reserved American tech giants like Google, Meta (with [Movie Gen](www.slashcam.de/news/single/Neue-Video-KI-Movie-Gen-kommt-mit-Killerfeature-18863.html)), and OpenAI (Sora) are in making their video AIs available to the public, while Chinese companies are far more proactive. They not only make their top models publicly accessible but even offer them partly free of charge, as with [Kling](www.slashcam.de/news/single/Kostenlose-Video-KI-Kling-jetzt-in-Version-1-5-mit-18836.html), [Vidu](www.slashcam.de/news/single/Vidu-1-5-generiert-Videoclips-aus-bis-zu-3-vorgege-18940.html), or [MiniMax/Hailuo](www.slashcam.de/news/single/Neue-Video-KI-MiniMax-meistert-menschliche-Bewegun-18781.html), or release them as open-source models for personal computers, as with [Genmo’s Mochi 1](www.slashcam.de/news/single/Genmo-Mochi-1---neue-Open-Source-Video-KI-will-mit-18898.html), [Pyramid Flow](www.slashcam.de/news/single/Pyramid-Flow---Neue-Open-Source-Video-KI-generiert-18875.html), or [Tencent’s Hunyuan](www.slashcam.de/news/single/Tencent-Hunyuan---Offenes-KI-Videomodell-soll-aktu-18979.html).

No Automatic Sound Effects or Music Yet

Google DeepMind's [video-to-audio functionality](www.slashcam.de/news/single/Google-DeepMind-wird-Videos-mit-automatisch-erzeug-18647.html), announced in June, adds suitable music, sound effects, or even dialogue to videos based on visual content and prompts. It was not mentioned in the announcement, but the feature is expected to find its way to Veo soon.

Imagen 3

The latest version of Google's image generator, Imagen 3, will be available to all Google Cloud customers starting next week.
Some customers will also have access to special features such as photo editing via prompts, in- and outpainting, and the ability to incorporate specific styles, logos, or products into generated images, for example applying a dress to a virtual model via prompt.

Safety and Copyright

Google emphasizes that both Veo and Imagen 3 include built-in safeguards to prevent the generation of copyrighted or prohibited content. Similar to [Adobe's Content Credentials System](www.slashcam.de/news/single/KI-oder-echt----Adobe-startet-Content-Credentials--18204.html), Google marks all AI-generated content, including future audio, with an invisible SynthID watermark so that it can be identified as AI-generated.