Anyone reasonably familiar with common image compression methods has probably thought more than once that the end of the line must surely have been reached by now. Seeing with the naked eye how much high-resolution image quality fits into just a few MB these days is all the more astonishing if you remember what digitally compressed images of the same size looked like 20 years ago.

But of course, artificial intelligence is now entering the research field of digital image compression, and Google's research teams have been at the forefront of this for years.

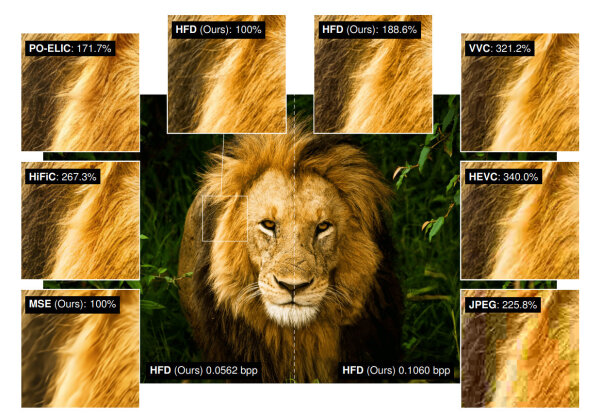

Diffusion models, currently the height of fashion in generative AI, turn out to be suitable for image compression as well, although this has received little attention so far. Google researchers have now proposed a new method that combines a standard autoencoder optimized for mean squared error with a diffusion process that recovers and adds the fine details discarded by the autoencoder.
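To make the two stages a little more concrete, here is a minimal sketch of how the pieces could fit together. It is not the researchers' implementation: `toy_autoencoder`, `placeholder_denoiser` and `denoising_loss` are hypothetical names, block averaging stands in for the trained autoencoder, and the denoiser is an untrained placeholder that simply returns the coarse reconstruction.

```python
import numpy as np

rng = np.random.default_rng(0)

def toy_autoencoder(image, factor=4):
    """Hypothetical stand-in for the MSE-trained autoencoder.

    Averages each factor x factor block and upsamples again. The block mean
    is the constant that minimizes squared error, so only coarse structure
    survives and the fine detail is discarded.
    """
    h, w = image.shape
    latent = image.reshape(h // factor, factor, w // factor, factor).mean(axis=(1, 3))
    recon = np.repeat(np.repeat(latent, factor, axis=0), factor, axis=1)
    return latent, recon

def placeholder_denoiser(noisy, recon, noise_level):
    """Hypothetical placeholder for the trained, conditioned network.

    A real model would predict the clean image from `noisy`, `recon` and
    `noise_level`; this stand-in just returns the coarse reconstruction.
    """
    return recon

def denoising_loss(image, recon, noise_level, denoiser):
    """One second-stage training sample (assumed form): corrupt the original,
    then score how well the denoiser recovers it given the reconstruction."""
    noise = rng.standard_normal(image.shape)
    noisy = np.sqrt(1.0 - noise_level) * image + np.sqrt(noise_level) * noise
    prediction = denoiser(noisy, recon, noise_level)
    return float(np.mean((prediction - image) ** 2))

image = rng.random((16, 16))             # toy grayscale image
latent, recon = toy_autoencoder(image)   # stage 1: small latent, coarse result
stage1_mse = float(np.mean((recon - image) ** 2))
stage2_loss = denoising_loss(image, recon, 0.1, placeholder_denoiser)

print(latent.shape, recon.shape)
print(stage1_mse, stage2_loss)
```

Because the placeholder ignores its noisy input, the second-stage loss here equals the autoencoder error. A trained denoiser would be expected to push that loss lower by restoring detail the first stage threw away.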

In our own tests, finely tuned diffusion models used as image compressors outperformed many other current generative approaches in terms of image quality.

As is often the case with diffusion models, the results depend heavily on the noise used. Unlike text-to-image models such as Stable Diffusion, training the diffusion process for compression tends to benefit from less noise, since the coarse details are already adequately captured by the autoencoder reconstruction.
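One way to picture "less noise" is to compare how much of the original signal two noise schedules leave intact at the final step. The sketch below uses the linear schedule endpoints commonly used for DDPM image generation and a hypothetical lower-noise variant. The helper name `alpha_bar` and the endpoint `0.005` are illustrative assumptions, not values from the paper.

```python
import numpy as np

def alpha_bar(beta_start, beta_end, steps=1000):
    """Cumulative product of (1 - beta) for a linear beta schedule.

    In the usual forward process x_t = sqrt(a_t) * x_0 + sqrt(1 - a_t) * eps,
    this is a_t, the squared coefficient of the original signal.
    """
    betas = np.linspace(beta_start, beta_end, steps)
    return np.cumprod(1.0 - betas)

# Linear schedule with the endpoints commonly used for DDPM image generation.
standard = alpha_bar(1e-4, 0.02)
# Hypothetical lower-noise variant; the endpoint 0.005 is an illustrative guess.
low_noise = alpha_bar(1e-4, 0.005)

# Squared signal coefficient remaining at the last step of each schedule.
print(f"standard:  {standard[-1]:.5f}")
print(f"low noise: {low_noise[-1]:.5f}")
```

With the lower-noise schedule, a noticeable part of the signal survives even at the last step. That is plausible when the coarse structure is already supplied by the autoencoder reconstruction and the diffusion process only has to fill in detail.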

For those who do not want to read the arXiv paper, here is a somewhat simplified representation of the process.

The whole project is certainly still a long way from a practical implementation. However, it is also certain that we will see more effective compression methods in the future.