[10:52 Fri, 2 September 2022, by Thomas Richter]

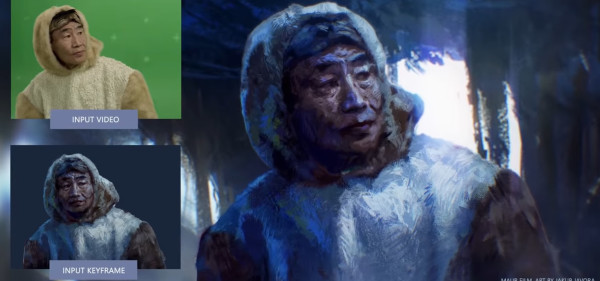

In a short demo video, the artist Karen X. Cheng shows which creative possibilities are already available with the help of various free AI tools. The clip shows her taking a few steps while her AI-generated clothing changes style several times: a time-lapse fashion show, so to speak.

She generated the new clothes from specific prompts using the in-painting function of an AI tool. Further AI tools, among them EBSynth, handled the remaining steps.

EBSynth: input video, keyframe and result

The whole thing is just a small experiment, but it shows how combining several AI tools can create interesting effects. These would have been possible without the tools, too, but significantly more complex to realize. The workflow ranges from creating fitted clothing for a person at the touch of a button after only a brief description, to transferring that change to multiple moving images, to a smooth transformation from one outfit to another. All of these steps would have taken a lot of time manually and are now easily repeatable in variations at any time thanks to the AI workflow.