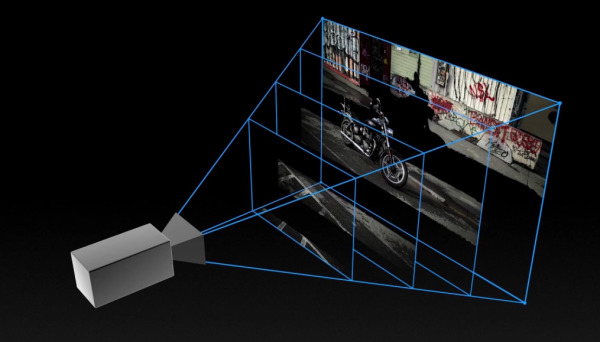

The project "Local Light Field Fusion: Practical View Synthesis with Prescriptive Sampling Guidelines" computes new 3D views of an object from just a few photos and can display them in real time, for example on a smartphone. This method is also based on deep learning: neural networks synthesize the new views from local light fields that are computed from a handful of photos.

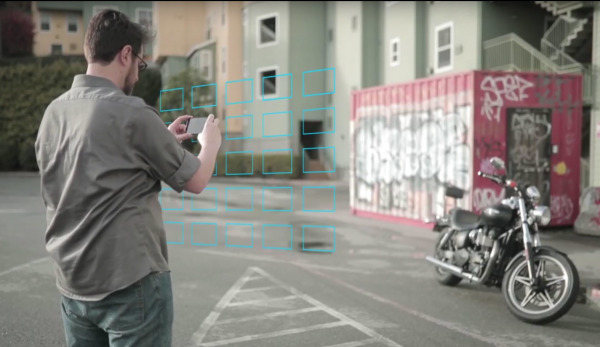

The algorithm determines how many photos from slightly different perspectives are required and guides the user interactively through capturing them with an augmented reality smartphone app. The app displays the positions from which photos still have to be taken so that a partial 3D view can be generated.
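To make the idea of a required number of photos more concrete, here is a minimal sketch in Python. It is not the project's code. It assumes a simple rule of thumb: the nearest scene point may shift by at most `max_disparity_px` pixels between two neighbouring photos. All function and parameter names are hypothetical, and the pinhole relation disparity = focal length × baseline / depth is a standard simplification.

```python
import math


def max_camera_spacing(max_disparity_px, nearest_depth_m, focal_length_px):
    """Largest distance between neighbouring camera positions (in metres).

    Assumption: a pinhole camera, where a point at depth z shifts by
    focal_length_px * baseline / z pixels when the camera moves sideways
    by `baseline`. Solving for the baseline gives the spacing limit.
    """
    return max_disparity_px * nearest_depth_m / focal_length_px


def views_per_axis(capture_extent_m, spacing_m):
    """Number of camera positions needed to cover a line of the given length."""
    return math.ceil(capture_extent_m / spacing_m) + 1


# Hypothetical numbers: nearest object 2 m away, focal length 1024 px,
# and at most 64 px of shift allowed between neighbouring photos.
spacing = max_camera_spacing(64, 2.0, 1024)
count = views_per_axis(1.0, spacing)
print(f"spacing: {spacing} m, photos along a 1 m line: {count}")
```

An app could use such a spacing to place the AR markers that show where the next photo should be taken. The closer the nearest object, the denser the required positions.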

A neural network first expands each photo into several depth layers. Together, these layers are used to compute local light fields, which let the user view an object from slightly different perspectives in real time, on a mobile device or on the desktop, so that it appears three-dimensional. The project's website also provides the program code for generating these 3D views from a demo image set and viewing them interactively.
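How depth layers can produce a slightly shifted view is easiest to see for a single pixel. The following Python sketch is an illustration under stated assumptions, not the project's implementation: each layer holds a colour and an opacity (alpha), layers are shifted sideways in proportion to their inverse depth, and they are then blended from back to front with the standard "over" operator. The names `layer_shift_px` and `composite_over` are hypothetical.

```python
def layer_shift_px(focal_length_px, camera_offset_m, layer_depth_m):
    """Sideways shift of one depth layer for a small camera movement.

    Assumption: pinhole camera, so near layers move more than far ones.
    This difference in motion is what creates the parallax effect.
    """
    return focal_length_px * camera_offset_m / layer_depth_m


def composite_over(layers_back_to_front):
    """Blend the layers of one pixel with the "over" operator.

    Each entry is ((r, g, b), alpha), ordered from the farthest layer
    to the nearest one. A nearer layer covers what lies behind it in
    proportion to its alpha.
    """
    out = (0.0, 0.0, 0.0)
    for rgb, alpha in layers_back_to_front:
        out = tuple(alpha * c + (1.0 - alpha) * o for c, o in zip(rgb, out))
    return out


# Hypothetical pixel: an opaque red background behind a half-transparent
# blue foreground layer.
pixel = composite_over([((1.0, 0.0, 0.0), 1.0), ((0.0, 0.0, 1.0), 0.5)])
print(pixel)

# The same camera movement shifts a layer at 2 m far more than one at 20 m.
print(layer_shift_px(100, 0.5, 2.0), layer_shift_px(100, 0.5, 20.0))
```

Because rendering a new view in this scheme only requires shifting and blending images, it is plausible that it can run in real time on a phone, which matches the behaviour described above.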