[15:08 Sat, 30 November 2024 by Rudi Schmidts]

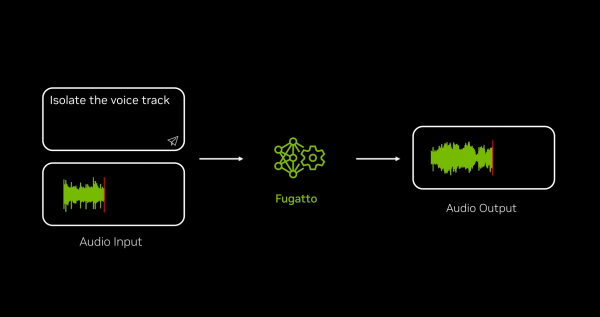

Nvidia has developed a kind of Swiss army knife for generative audio AI. Unlike highly specialized models, Fugatto is a foundation model that handles numerous, highly diverse audio generation and transformation tasks. According to Nvidia, it is also the first generative AI foundation model to show emergent properties: capabilities that arise from the interaction of its various trained abilities, including the ability to combine freely formulated instructions.

For example, music producers could use Fugatto to quickly prototype or edit a song idea, trying out different styles, voices and instruments. They could also add effects and improve the overall audio quality of an existing track. An advertising agency could use Fugatto to quickly adapt an existing campaign to multiple regions or situations, adding different accents and emotions to the voiceovers. Language learning tools could be personalized to use any voice the speaker chooses. Video game developers could use the model to modify pre-recorded assets in their title to match the changing storyline as users play, or create new assets on the fly from text instructions and optional audio input.

One capability the developers are particularly proud of is the audio counterpart of what is known as the "avocado chair" in generative image AI, that is, a novel combination of unrelated concepts. For example, Fugatto can make a trumpet bark or a saxophone meow. Whatever users can describe, the model is supposed to be able to create. With fine-tuning on a small amount of singing data, it should even be possible to generate a high-quality singing voice from a text prompt.

During inference, the model uses a technique called ComposableART to combine instructions that it saw only separately during training. For example, a combination of prompts might ask for text spoken with a sad emotion and a French accent. The model's ability to interpolate between instructions gives users fine-grained control over text prompts, in this case over the strength of the accent or the degree of sadness.

The model can also generate sounds or soundscapes that change over time (temporal interpolation). And unlike most models, which can only reproduce the kind of training data they have been exposed to, Fugatto even allows users to create entirely new soundscapes, such as a thunderstorm fading into dawn accompanied by birdsong.
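The article does not describe how ComposableART works internally. As a rough illustration only, the following Python sketch shows one common way to combine and interpolate several prompts at inference time: each prompt contributes a weighted "guidance direction" relative to an unconditional prediction, and the weights can change over time. The function names (`compose_guidance`, `crossfade_weights`), the prompt labels and the toy two-element vectors are all hypothetical and are not taken from Nvidia's implementation.

```python
from typing import Dict, List

Vector = List[float]


def compose_guidance(
    uncond: Vector,
    cond: Dict[str, Vector],
    weights: Dict[str, float],
) -> Vector:
    """Add each prompt's weighted offset from the unconditional prediction.

    Assumption: this is a generic weighted-guidance scheme, not Fugatto's
    confirmed method. A weight of 0 ignores a prompt; larger weights
    strengthen its influence (e.g. a stronger accent).
    """
    out = list(uncond)
    for name, pred in cond.items():
        w = weights.get(name, 0.0)
        for i, (p, u) in enumerate(zip(pred, uncond)):
            out[i] += w * (p - u)
    return out


def crossfade_weights(start: str, end: str, step: int, total_steps: int) -> Dict[str, float]:
    """Linear schedule that fades from prompt `start` to prompt `end` over time."""
    t = step / (total_steps - 1) if total_steps > 1 else 1.0
    return {start: 1.0 - t, end: t}


# Toy stand-ins for model predictions (hypothetical values).
uncond = [0.0, 0.0]
cond = {"sad": [1.0, 0.0], "french_accent": [0.0, 2.0]}

# Half-strength sadness combined with a full-strength accent.
mixed = compose_guidance(uncond, cond, {"sad": 0.5, "french_accent": 1.0})

# Temporal interpolation: weights for a five-step fade from storm to dawn.
schedule = [crossfade_weights("thunderstorm", "dawn", s, 5) for s in range(5)]
```

In this sketch, changing a single weight corresponds to turning the accent or the sadness up or down, while the schedule corresponds to a soundscape that gradually moves from one description to another.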