[14:39 Mon, 29 July 2019, by Thomas Richter]

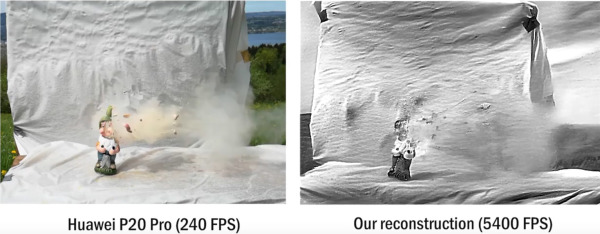

Human perception works quite differently from a camera: instead of receiving a whole image per unit of time, it continuously receives changes in the visual world as stimuli. Novel "event cameras" work in a similar way. They use sensors that are completely different from those in previous cameras and record changes in brightness as a stream of asynchronous "events". This gives them considerable advantages over conventional cameras: low latency, high temporal resolution, high dynamic range (HDR), no motion blur and only a small amount of data to store.

However, the method also has a drawback: it does not take pictures in the conventional sense. To obtain conventional images from the recorded event stream, they must first be reconstructed. A team from ETH Zurich and the University of Zurich has now made this reconstruction considerably more efficient by using machine learning to reconstruct conventional images from the event data. It runs in real time at up to 5,400 images per second and delivers significantly higher image quality than previous methods. Event cameras combined with the new algorithm could enable new, low-cost high-speed cameras in the future, as well as better cameras for machine applications such as autonomous drones (see video below). And of course, the method also works in color.

Rapid reaction of a drone using an event camera:

German version of this page: "Super-Zeitlupe per Kamera, die wie die menschliche Wahrnehmung funktioniert" (Super slow motion with a camera that works like human perception)
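To make the idea of an event stream more concrete, here is a minimal sketch of the idealized sensor model commonly used to describe event cameras: each pixel independently emits an event (with a timestamp, position and polarity) whenever its log brightness has moved by a fixed contrast threshold since its last event. The threshold of 0.2, the `EPS` offset and the function names are illustrative assumptions, not values from the ETH/UZH work. The reconstruction shown here is plain "direct integration" of events, a naive baseline and explicitly *not* the machine-learning method described above. It needs a known start frame and only recovers brightness to within one threshold step, which hints at why a learned reconstruction is attractive.

```python
import math
from typing import List, NamedTuple

EPS = 1e-3  # assumption: small offset so black pixels do not hit log(0)

Image = List[List[float]]  # rows of linear brightness values


class Event(NamedTuple):
    t: float       # timestamp in seconds
    x: int         # pixel column
    y: int         # pixel row
    polarity: int  # +1 = got brighter, -1 = got darker


def generate_events(frames: List[Image], times: List[float],
                    threshold: float = 0.2) -> List[Event]:
    """Idealized event sensor (assumed model): a pixel fires one event each
    time its log brightness moves `threshold` away from its reference level."""
    ref = [[math.log(v + EPS) for v in row] for row in frames[0]]
    events: List[Event] = []
    for frame, t in zip(frames[1:], times[1:]):
        for y, row in enumerate(frame):
            for x, value in enumerate(row):
                diff = math.log(value + EPS) - ref[y][x]
                # Emit as many events as whole threshold steps were crossed.
                while abs(diff) >= threshold:
                    pol = 1 if diff > 0 else -1
                    events.append(Event(t, x, y, pol))
                    ref[y][x] += pol * threshold
                    diff -= pol * threshold
    return events


def integrate_events(first: Image, events: List[Event],
                     threshold: float = 0.2) -> Image:
    """Naive reconstruction by direct integration (not a learned method):
    start from a known frame and add +/- threshold per event in log space."""
    log_img = [[math.log(v + EPS) for v in row] for row in first]
    for ev in events:
        log_img[ev.y][ev.x] += ev.polarity * threshold
    return [[math.exp(v) - EPS for v in row] for row in log_img]


# Hypothetical 1x2-pixel "video": the left pixel doubles in brightness,
# then the right pixel halves.
frames = [[[1.0, 1.0]], [[2.0, 1.0]], [[2.0, 0.5]]]
times = [0.0, 0.001, 0.002]

events = generate_events(frames, times)
recon = integrate_events(frames[0], events)
print(len(events))                       # 6
print([round(v, 2) for v in recon[0]])   # [1.82, 0.55]
```

Only pixels that change produce data (three events each here), which illustrates the small data volume mentioned above. The reconstructed values (about 1.82 and 0.55 instead of 2.0 and 0.5) stay within one threshold step in log space, but with real sensor noise and unknown thresholds such errors accumulate over time.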