[16:25 Sun, 11 September 2022 by Thomas Richter]

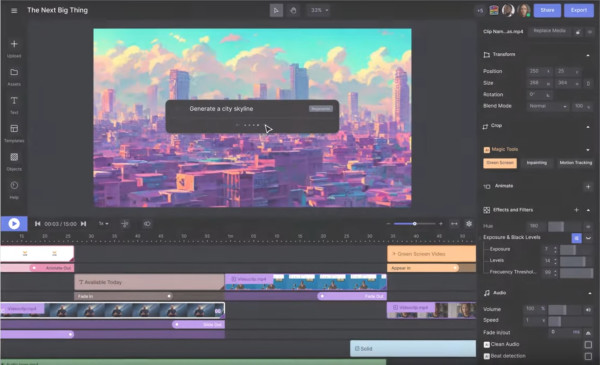

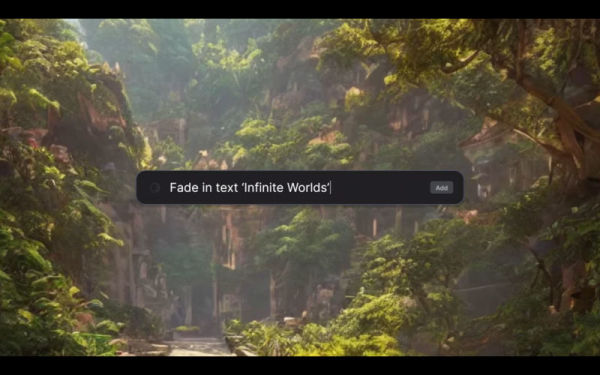

An interesting video clip is currently making the rounds that may show the future of film production. The teaser, produced by Runway ML, demonstrates how videos can be edited and produced using nothing but text descriptions. It shows a series of text inputs that first import a dynamic street scene and give it a film look, after which an object is roughly marked with a brush and seamlessly removed from the scene.

The clip demonstrates several AI techniques that are already known: generating images, seamlessly removing selected objects from a video, generating backgrounds, and masking objects including keying, all driven by text commands. It suggests that all of these techniques also work in video editing, although the teaser of course does not make clear how far the video-specific functions actually go, or whether the clip was really produced with the techniques shown or the intended feature set was merely simulated via compositing. The big step towards AI-based generation of entire video sequences does not seem to have been taken yet, because the video sequence at the beginning is explicitly imported rather than created.

However, since the clip comes from Runway ML, it can be assumed that text-controlled, object-based editing via AI will soon be available for video as well. Green screen, inpainting and motion tracking are already part of Runway's online video editor, so text commands would be the main new feature.

And even if image quality and resolution are not very high at first (Stable Diffusion and similar algorithms can handle high-resolution images only via computationally expensive workarounds, and generated bodies, especially human ones, are often still rendered incorrectly), an absolute revolution in video production is just around the corner, sooner than expected.

Runway ML (Machine Learning) has been offering its online video editor with numerous AI functions for several years now. The team includes, among others, the Heidelberg-based AI researcher Patrick Esser, who also played a major role in the development of an AI image generator. You can currently sign up for a closed beta to soon be able to test the text-to-video functions demonstrated in the clip in the online video editor. We are extremely excited.