[19:18 Thu, 14 November 2024 by blip]

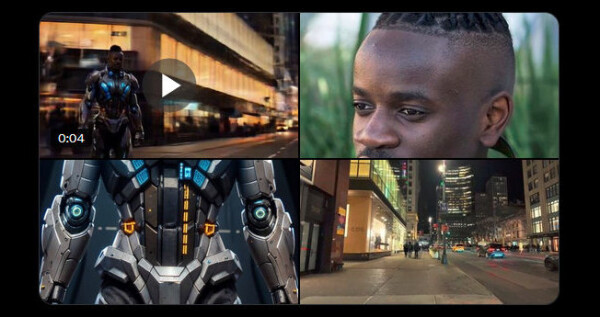

The AI model behind the Chinese multimodal video generator Vidu has been updated: according to Shengshu Technology, the new version 1.5 brings “multi-entity consistency”. This means the model can combine reference images of different objects, characters or environments into a single coherent video. Users thereby gain more control over the generated content, because they can specify individual components of the clip.

If, for example, images of a person, a particular item of clothing and a moped are uploaded as references, Vidu 1.5 generates a video in which this person wears the clothing and rides the moped. According to the developers, no other AI video model currently offers this functionality. In addition, the reference objects (or characters and environments) are said to remain consistent throughout the generated video rather than, as so often happens in AI videos, gradually morphing into one another or dissolving.

Another new feature is consistent character rendering from different angles: given three photos of a person, Vidu 1.5 is said to be able to compute a seamless 360° view and reproduce different facial expressions naturally. Control over the (virtual) camera movement has also been extended, so that horizontal and vertical pans as well as zooms, also in combination, can be generated for more sophisticated shots. For animation fans, new styles such as Japanese fantasy and hyperrealism have been added.

Image details are reportedly rendered more accurately in clips created by Vidu 1.5; the maximum resolution remains 1080p. Generation is also supposed to be faster: 25 seconds of computing time should be enough to produce 4 seconds of video. Thanks to advances in semantic understanding, the new model is said to interpret text prompts more precisely than before, so that even complex scenes can be realized.

Competing video AIs are also currently working toward more control over the image and better visual consistency. For example, a major new feature in ...