[09:20 Tue, 25 May 2021 by Thomas Richter]

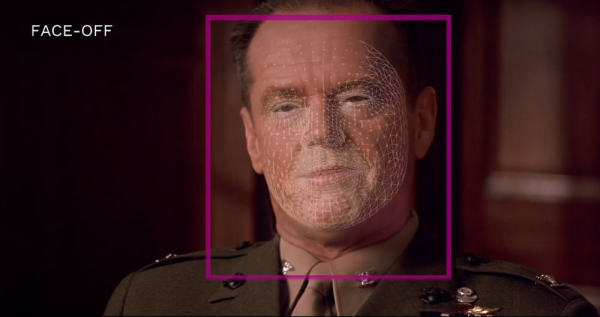

The London-based startup Flawless AI wants to make dubbed films look better by using AI to adapt the speaker's lip movements to the voice actor's text. Last year we reported on the deep learning algorithm "Wav2Lip", developed by an Indian research team. Flawless AI's algorithm, christened TrueSync, works similarly. According to the company's own claims, it works photorealistically, and the lip sync is so seamless that the actor's particular performance is preserved in detail and viewers are no longer distracted by inconsistencies between lip movements and what is spoken.

Demo of Flawless AI's TrueSync algorithm:

For this purpose, the algorithm, which is based on a […]

Interesting for major movie studios, Netflix and more

Flawless AI, as can already be seen in the demo video, is targeting major film and series productions and is working with film studios such as Paramount. The contacts come from Flawless AI's co-founder, filmmaker Scott Mann, who, among other things, made the 2015 film "Heist" with Robert De Niro. The first major film to use the method is scheduled for release in about a year. In addition to the major film studios, a lip-sync algorithm would also be highly interesting for streaming providers such as Netflix or Amazon Prime, which could then automatically (and relatively inexpensively) adapt the visuals of the many foreign-language versions of their films and series to the respective spoken language. Of course, a lip-sync algorithm would also be interesting on a smaller scale, such as for filmed lectures, press conferences, or animated films in other languages.

In addition to filmmakers and viewers, translators of dubbed texts would also benefit: their work would become much easier if they no longer had to ensure that the spoken text of the translation does not deviate too much from the lip movements of the original.
This is because the dialog is often heavily altered during dubbing to better match the actors' lip movements, which can considerably distort its meaning. Even with AI lip-syncing, however, the length and timing of the text would of course still have to be exactly right. For filmmakers, the technology also offers a simple alternative to costly reshoots when the spoken text of a scene needs to be changed after the fact.

Automatic foreign language versions on YouTube?

In the future, it would also be conceivable to offer versions of any clip in other languages completely automatically, for example on YouTube. After all, YouTube already provides automatic transcription including subtitles, and the next steps are also already possible with the help of various deep learning algorithms: translating the transcribed text into another language, synthesizing speech in the voice of the original speaker, and then lip-syncing the video to the new audio.

Caveat

In theory, however, the technique can of course be abused to generate clips in which people realistically appear to say things they never said, since the new audio can also be generated by a neural network to mimic the real voice.
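
For readers who want to picture the automatic dubbing chain described for YouTube clips (transcription, translation, speech synthesis in the original voice, lip-syncing), here is a minimal Python sketch of how such stages could be chained. It is purely illustrative: `Clip`, `DubbingPipeline` and the four stage functions are hypothetical names invented for this example, the stages are string-based stand-ins rather than real models, and nothing here reflects how Flawless AI, Wav2Lip or YouTube actually implement these steps.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Clip:
    """Hypothetical container; strings stand in for real media data."""
    video: str
    audio: str
    language: str


@dataclass
class DubbingPipeline:
    """Chains the four steps named in the article. Every stage is an assumption."""
    transcribe: Callable[[str], str]        # audio -> transcript
    translate: Callable[[str, str], str]    # transcript, target language -> translated text
    synthesize: Callable[[str, str], str]   # translated text, reference audio -> new audio
    lip_sync: Callable[[str, str], str]     # video, new audio -> adapted video

    def run(self, clip: Clip, target_language: str) -> Clip:
        text = self.transcribe(clip.audio)
        translated = self.translate(text, target_language)
        # The original audio serves as the voice reference for synthesis.
        new_audio = self.synthesize(translated, clip.audio)
        new_video = self.lip_sync(clip.video, new_audio)
        return Clip(new_video, new_audio, target_language)


# Stand-in stages that only tag their inputs, so the data flow stays visible.
demo = DubbingPipeline(
    transcribe=lambda audio: f"text({audio})",
    translate=lambda text, lang: f"{lang}:{text}",
    synthesize=lambda text, ref: f"voice[{ref}]({text})",
    lip_sync=lambda video, audio: f"{video}+lips({audio})",
)

result = demo.run(Clip("video.mp4", "audio.wav", "en"), "de")
print(result)
```

In a real system each stand-in would be replaced by a trained model, and, as noted above, the translated text would still have to match the original in length and timing before the lip-sync step could work.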