W.A.L.T. for moving images no longer just stands for Disney, but for a transformer-based approach to photorealistic AI video generation using diffusion modeling.

Google's new model for generative AI videos is based on two pillars: a uniform latent space that has been trained with images AND videos. And an attention mechanism that processes not only local (spatial) but also temporal (spatiotemporal) tokens.

Put very simply: Time, or points in time, also become learning parameters in this model. This enables W.A.L.T. in the first version to generate text-to-video with a resolution of 512 x 896 at 8 frames per second.

W.A.L.T. - Photorealistic Video Generation with Diffusion Models

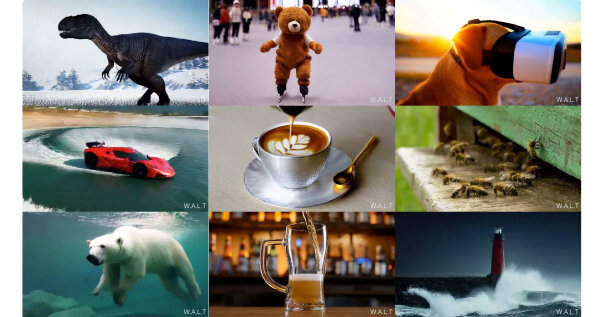

Even though the expectations for AI-generated video are now extremely high, the demo clips shown here are not really photorealistic - at least not in a professional sense. Nevertheless, compared to previous SOTA (State-Of-The-Art) models, the clips show a few features that have not yet been seen in this form.

The videos shown are impressively consistent over time and can reproduce even relatively complex motion sequences without errors in some cases. Even peripheral motifs and backgrounds are only plagued by a few unintentional changes.

In short, it may not be a milestone, but at least it is another solid step towards photorealistic AI video generation.

To form your own opinion of W.A.L.T., Google has launched its own  website with sample videos - which of course only reveals the most successful results of the W.A.L.T. model. But this is also the case with presentations of other, competing AI models. So you can certainly take a few minutes to marvel at the new results.

website with sample videos - which of course only reveals the most successful results of the W.A.L.T. model. But this is also the case with presentations of other, competing AI models. So you can certainly take a few minutes to marvel at the new results.